Vertebrae joined Snap in 2021, where we continued building and expanding on what we had started. Snap was establishing a new organization, AR Enterprise Services (ARES), focused on bringing its world-renowned augmented reality technology beyond the Snapchat app, starting with e-commerce.

I continued designing AR experiences, focusing on AR try-ons, image try-ons, 3D viewers, and asset management. I also collaborated on a product fit-prediction app and on AR mirrors for physical spaces, and we explored AI commerce integrations.

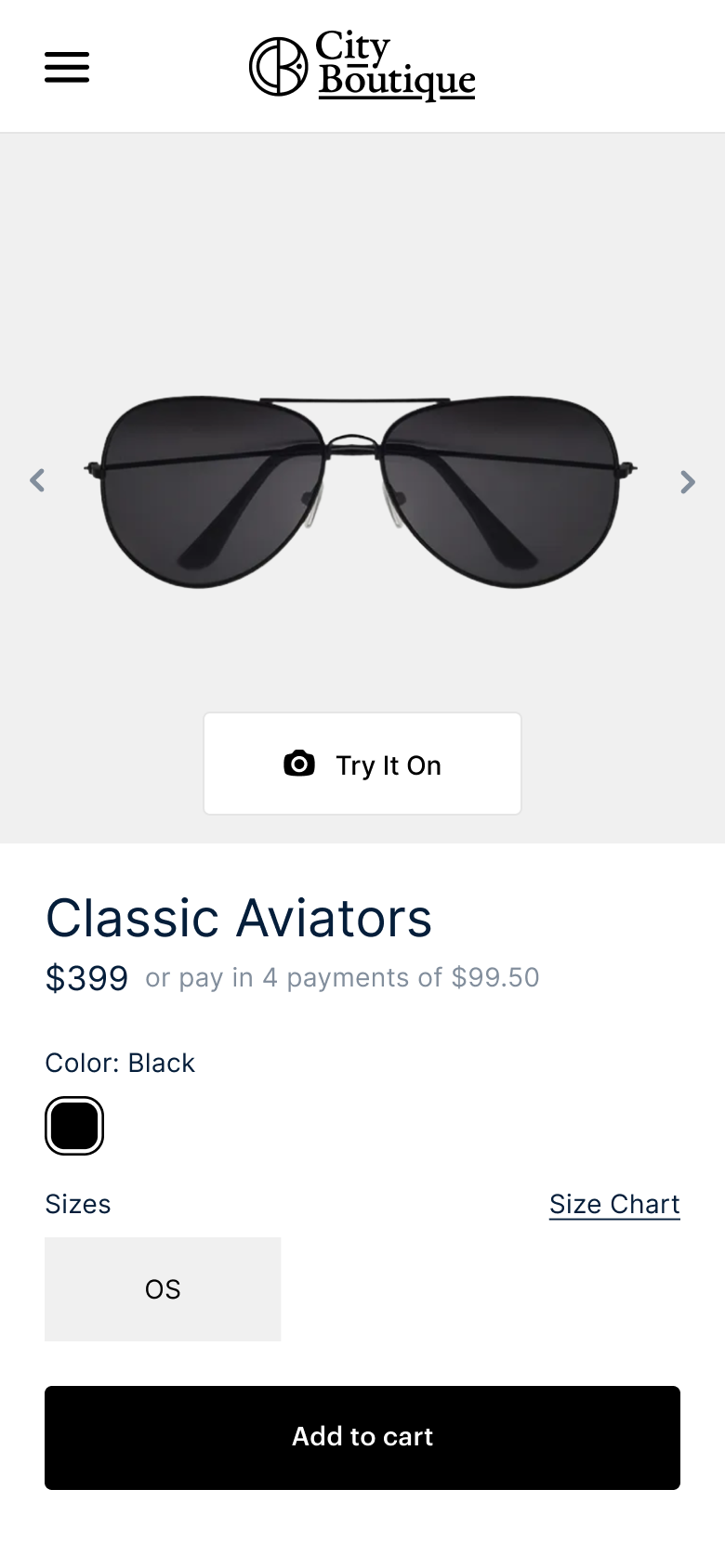

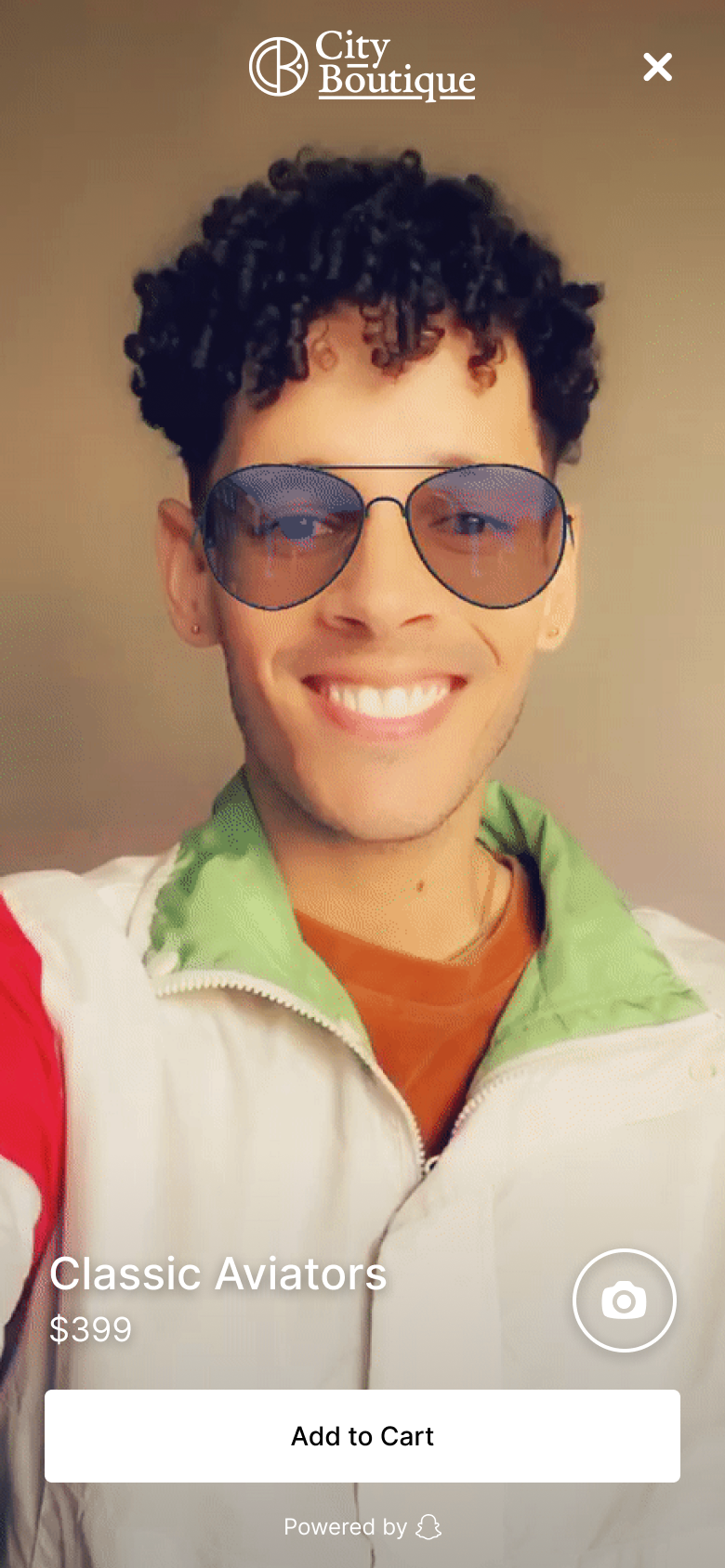

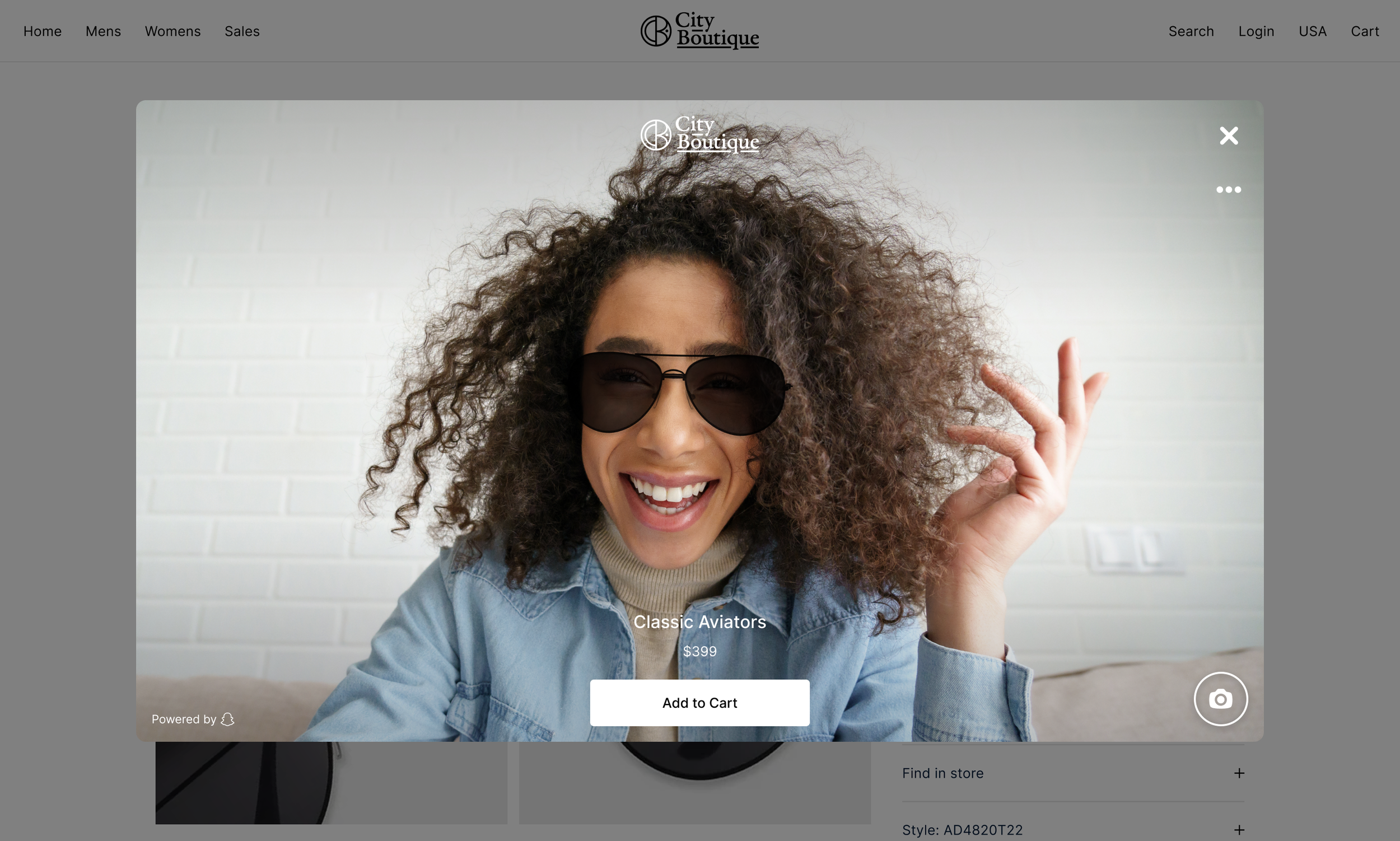

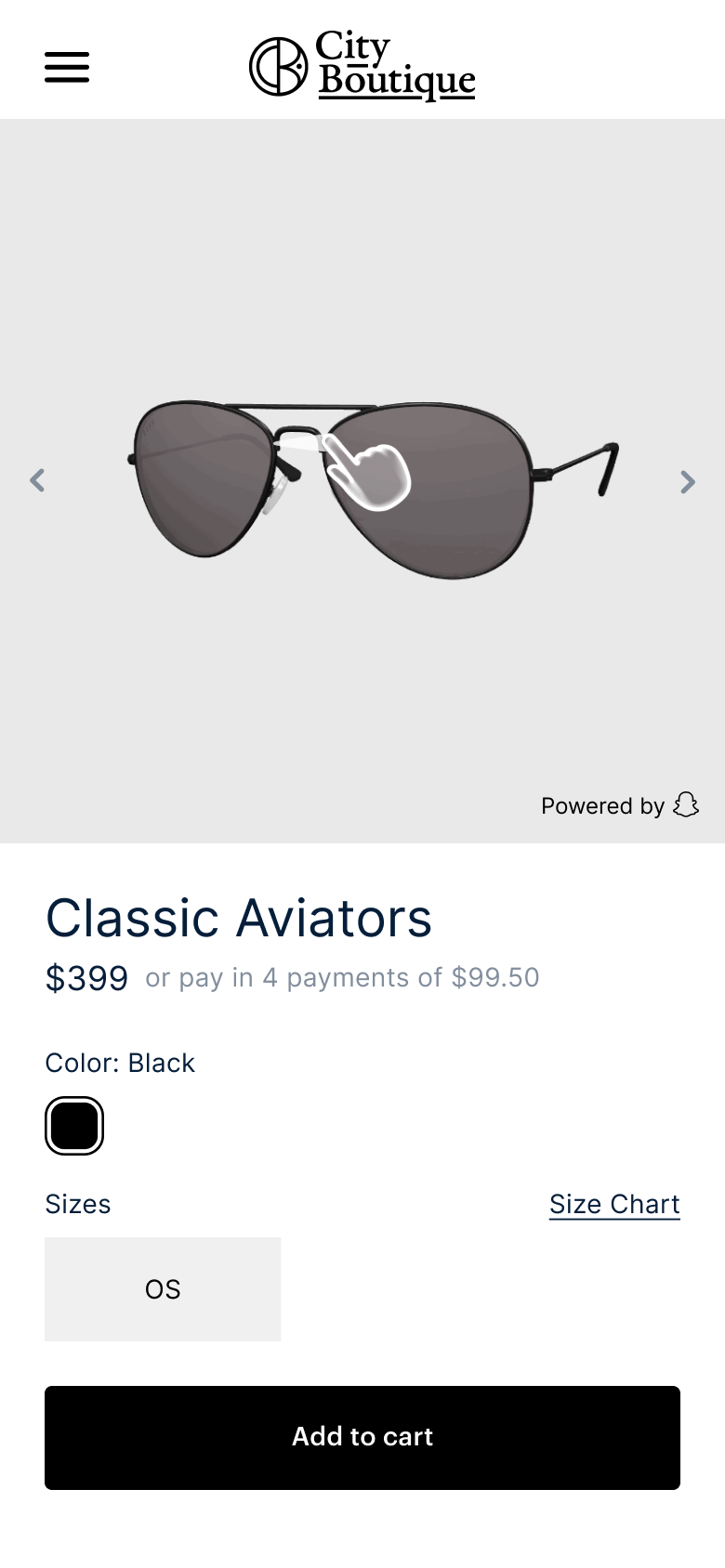

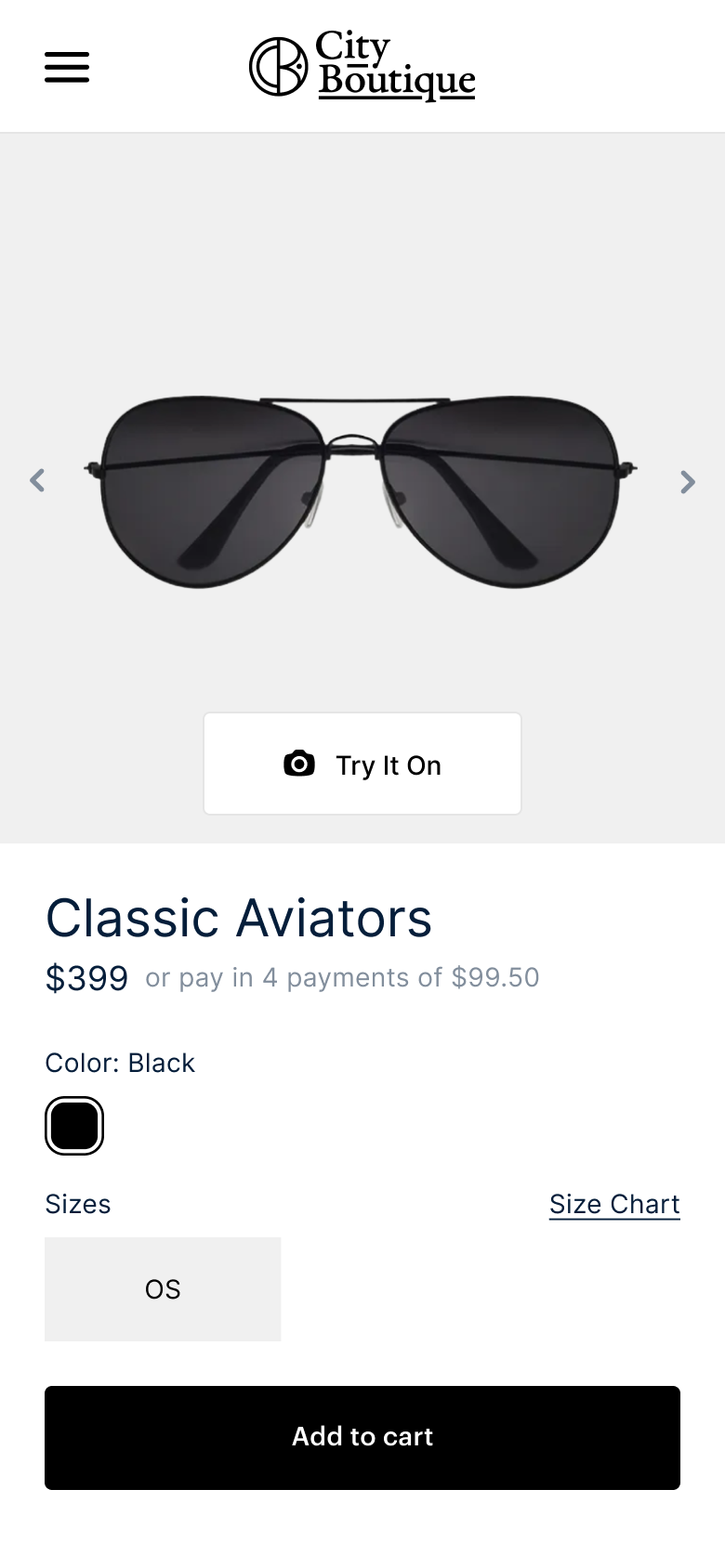

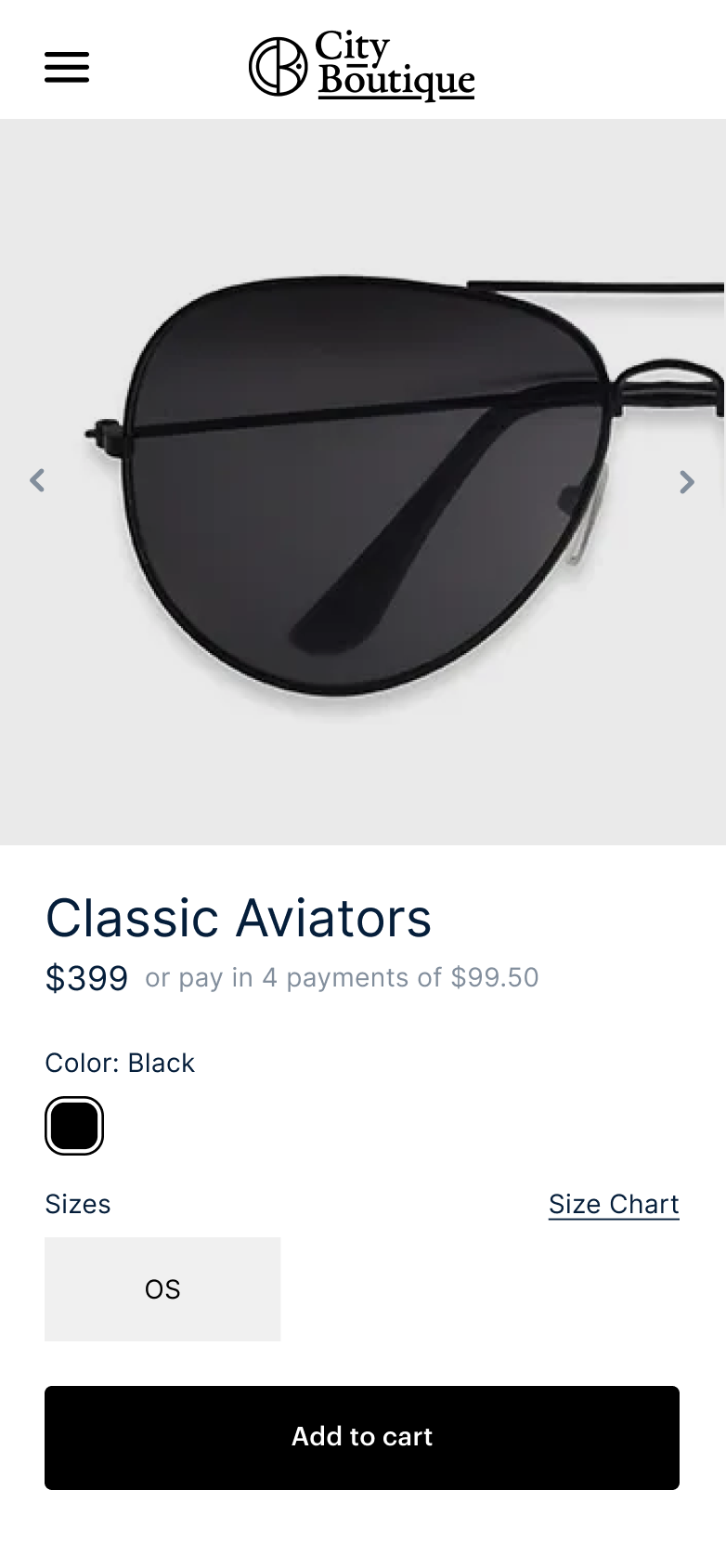

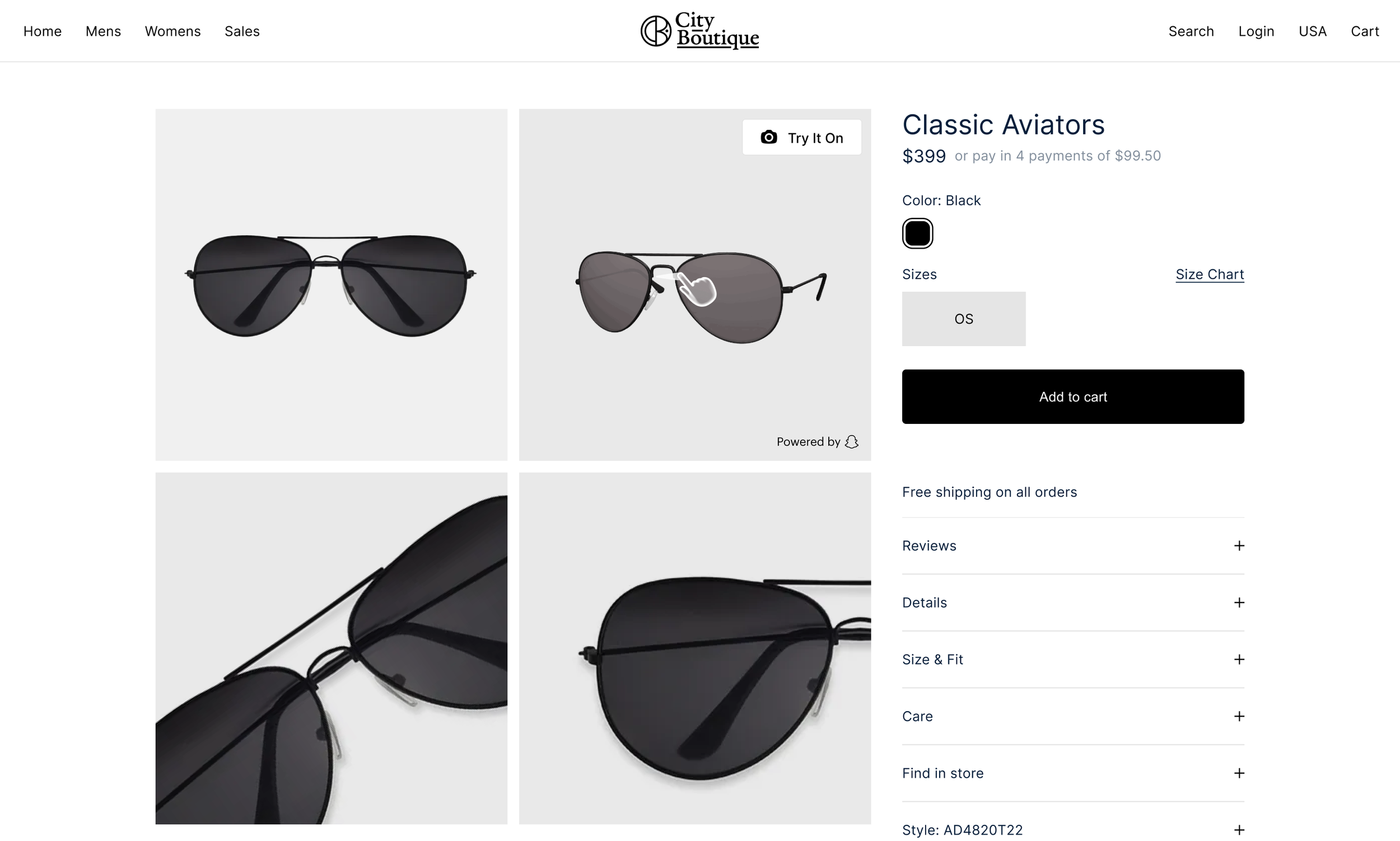

Live Try-On Eyewear

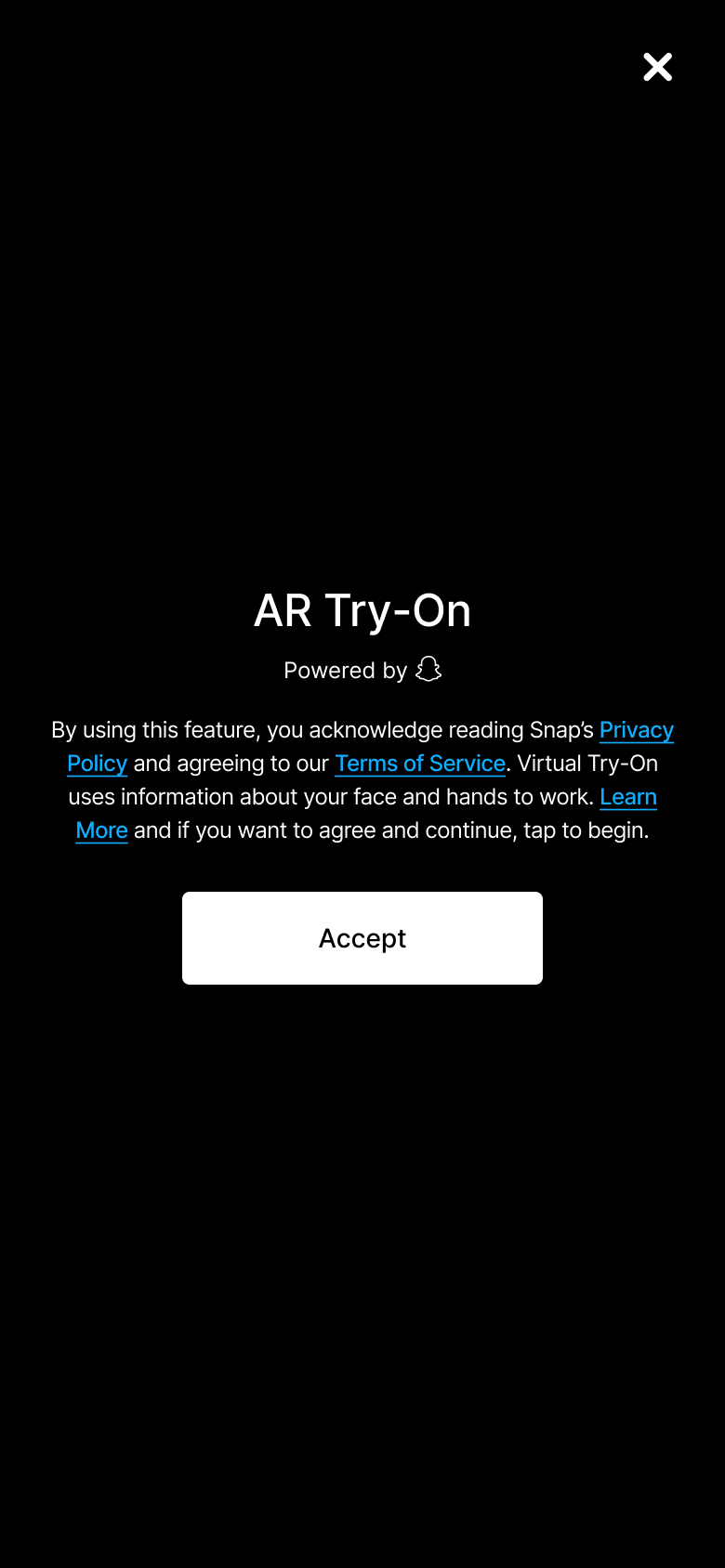

The goal was to make buying glasses online feel as natural and confident as trying them on in-store. Using Snap’s camera and real-time AR technology, we created a flow where users could open the front-facing camera directly from a product page and see how different frames looked and fit in seconds.

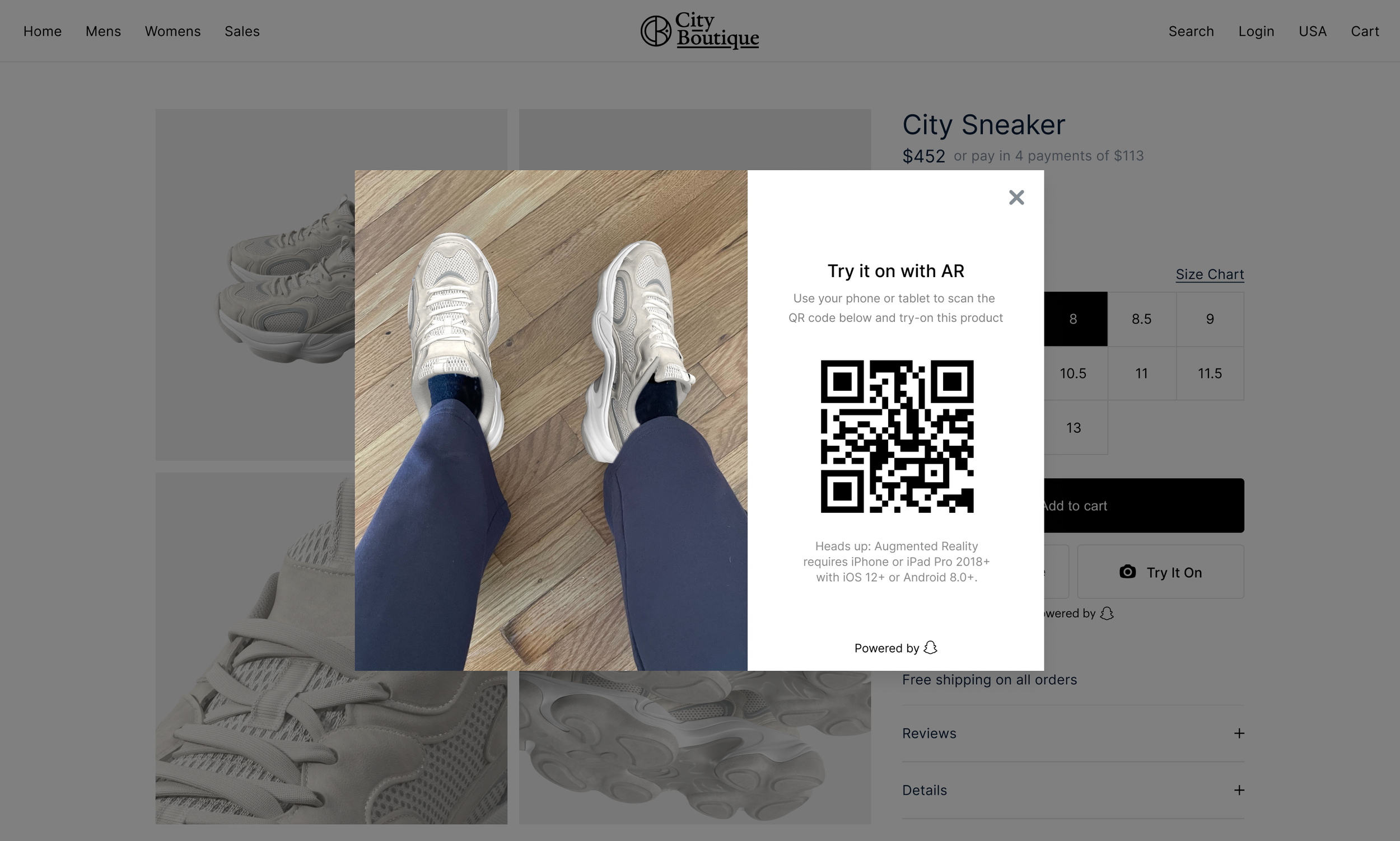

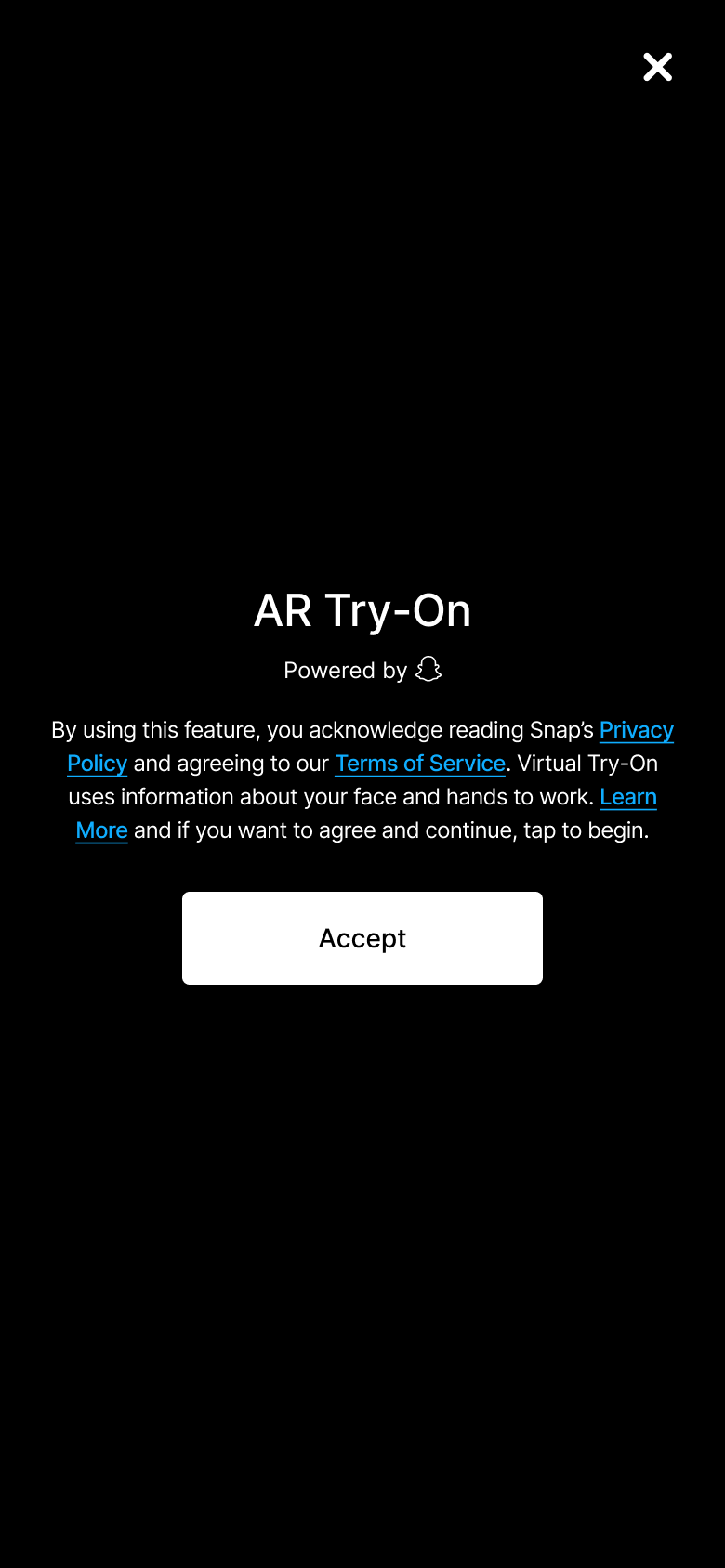

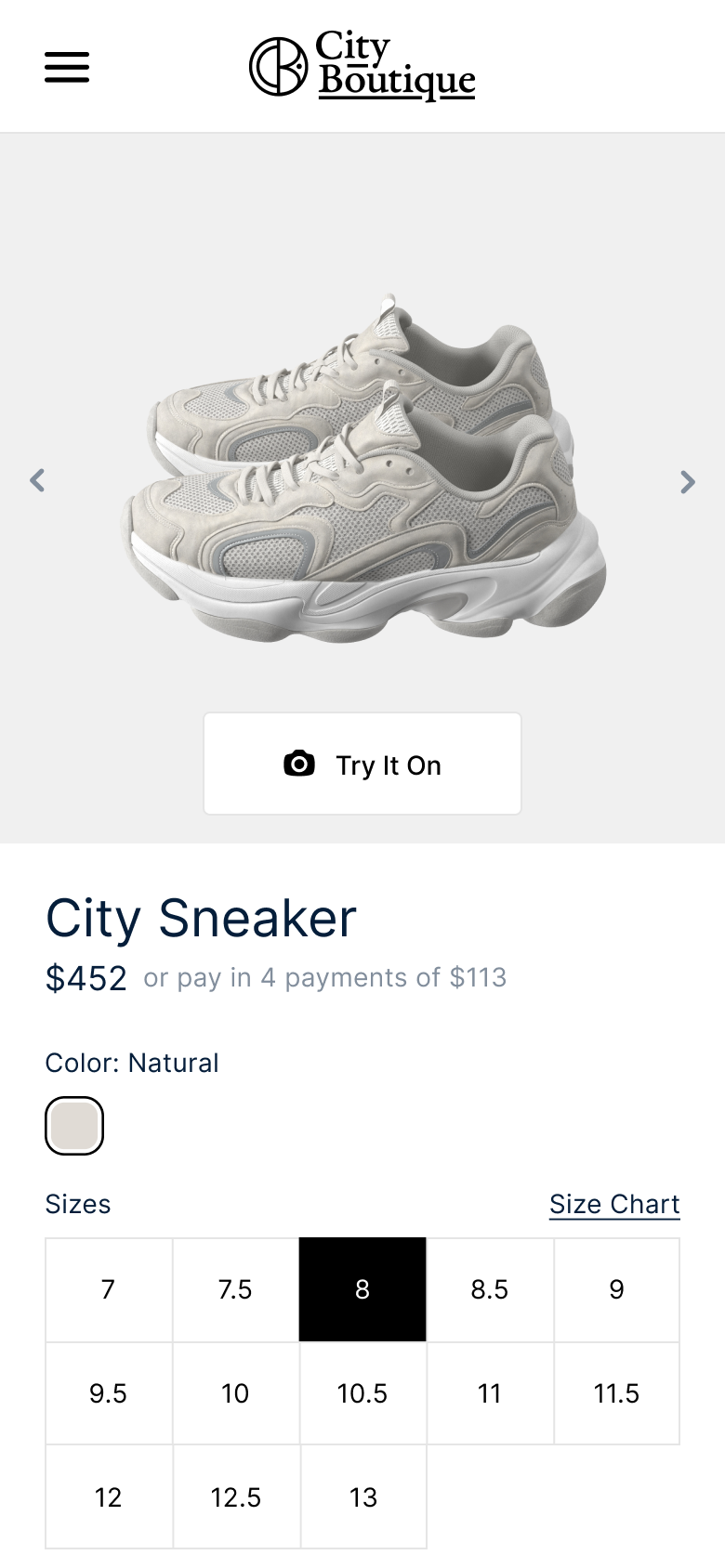

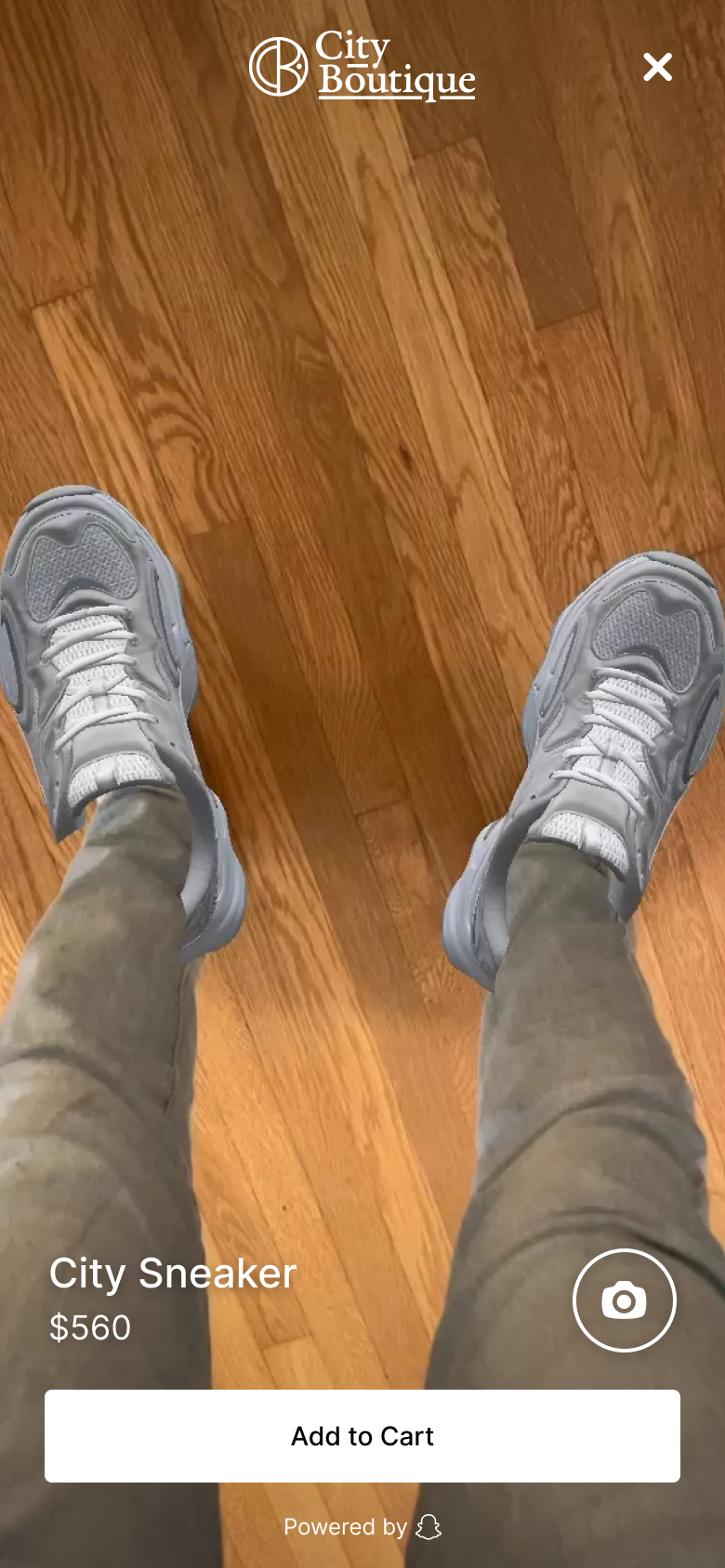

Live Try-On Footwear

Using the device’s back-facing camera, we built an experience that tracked users’ feet in real time and rendered shoes over them with realistic lighting, depth, and motion.

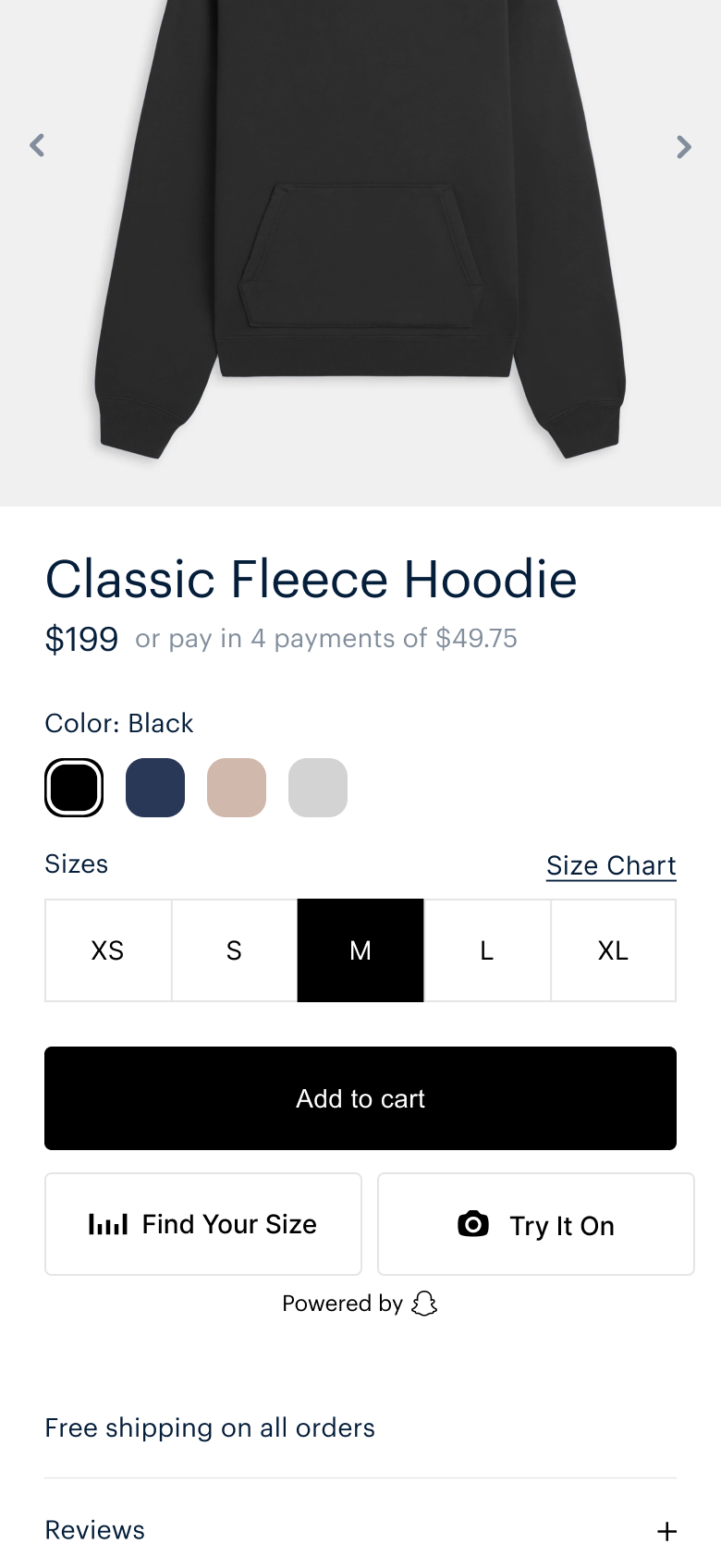

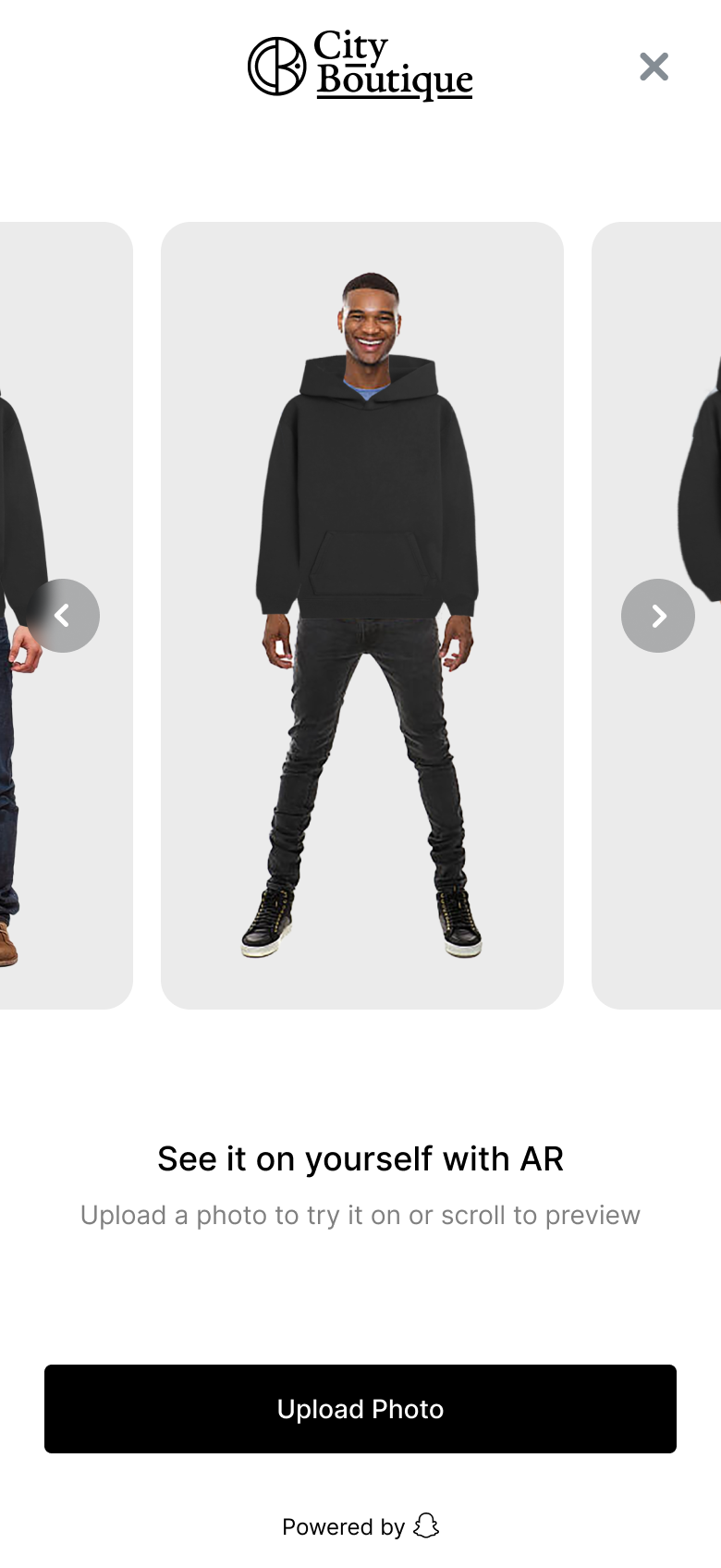

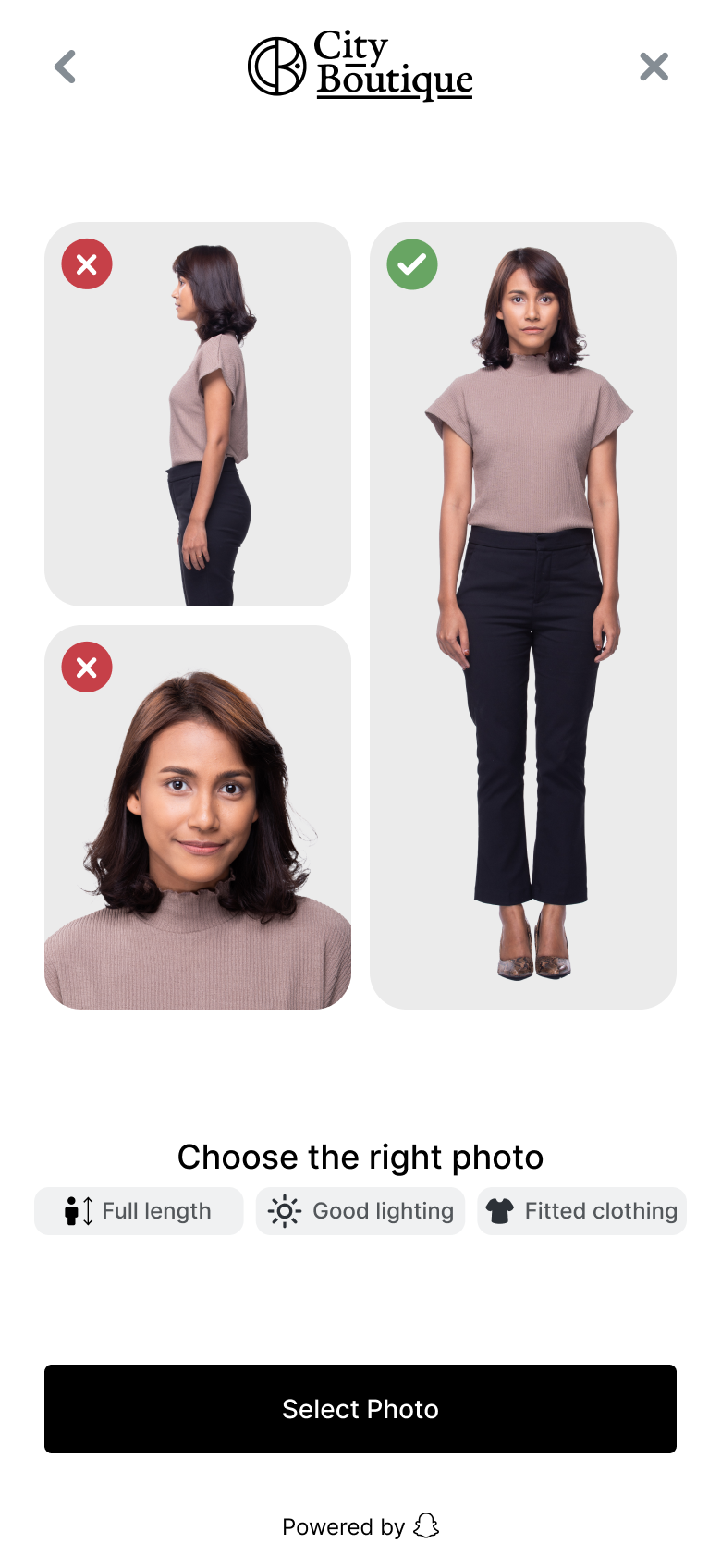

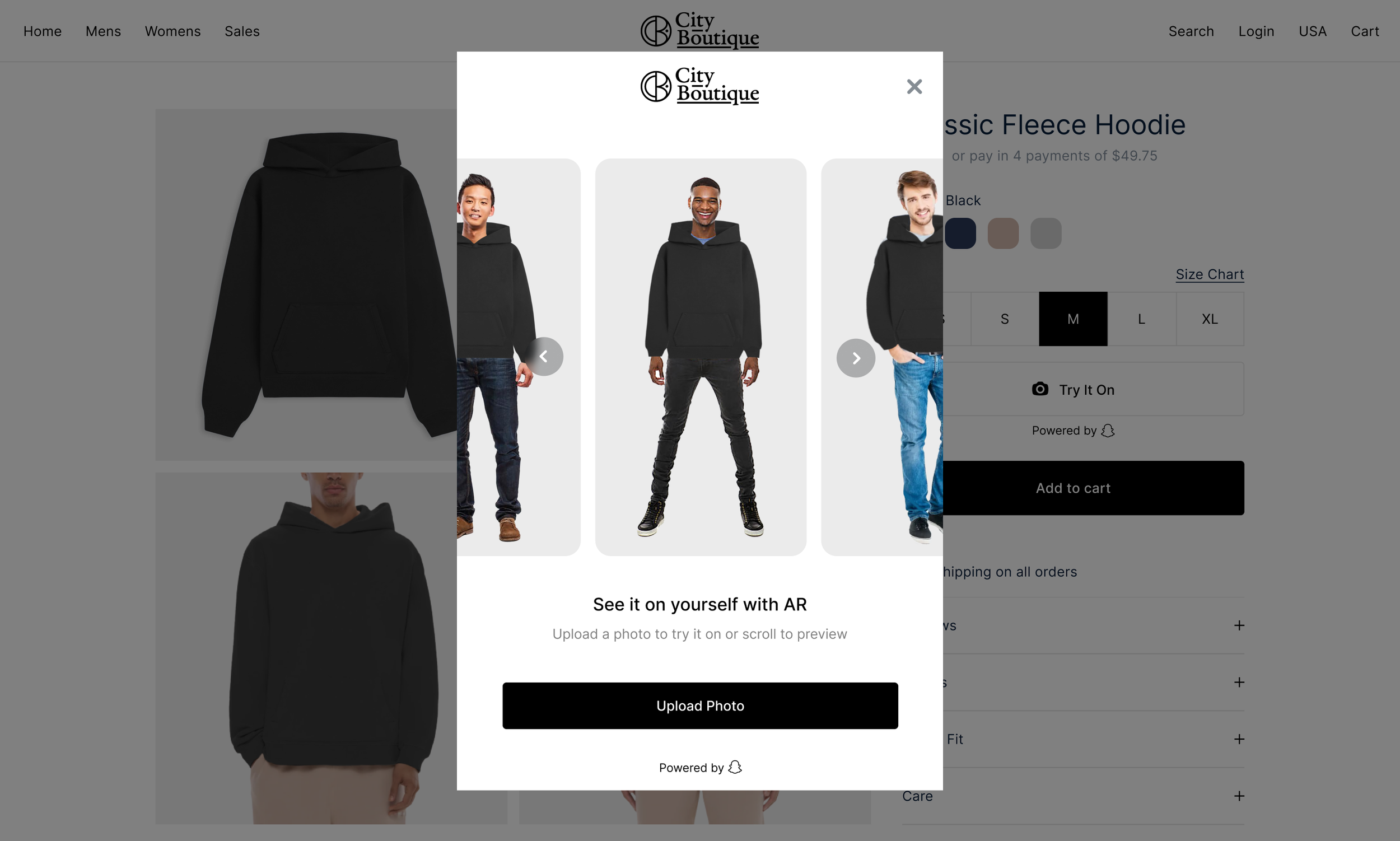

Image Try-On

Image Try-On explored product try-ons on full-body images. Shoppers could upload a photo of themselves or select an avatar model, then see how garments looked in context on that image.

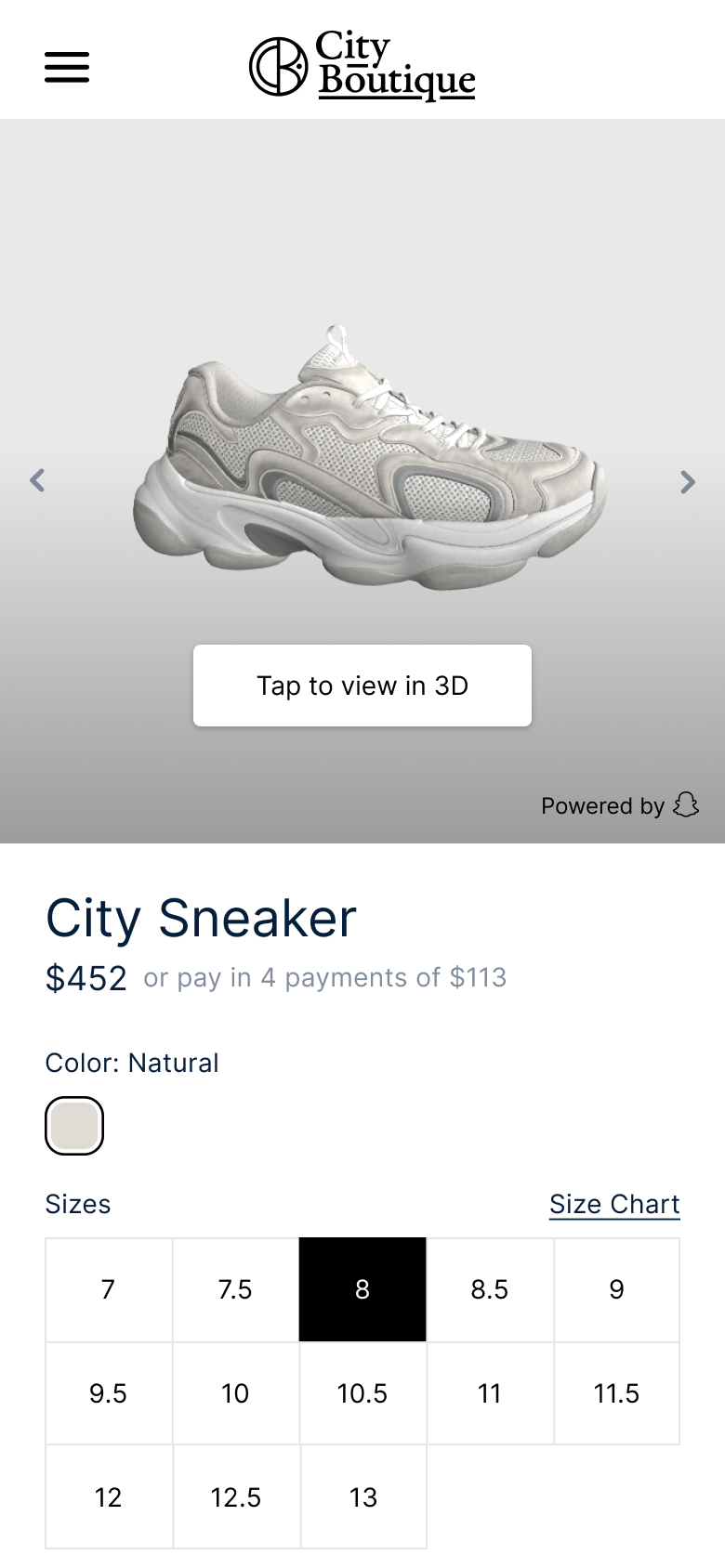

3D Viewer

The 3D Viewer let us bring the same assets used for AR into the traditional e-commerce product image carousel. Customers could rotate, zoom, and inspect items in high detail, from the stitching on a shoe to the texture of a handbag. Designed to integrate seamlessly into a merchant’s product page, it offered an intuitive, low-latency experience across devices and browsers.

Digital Asset Manager

The Digital Asset Manager served as the foundation for every experience we built. It allowed merchants to upload, store, and manage 3D and AR assets, then approve and publish them directly to their associated try-on or viewer integrations.